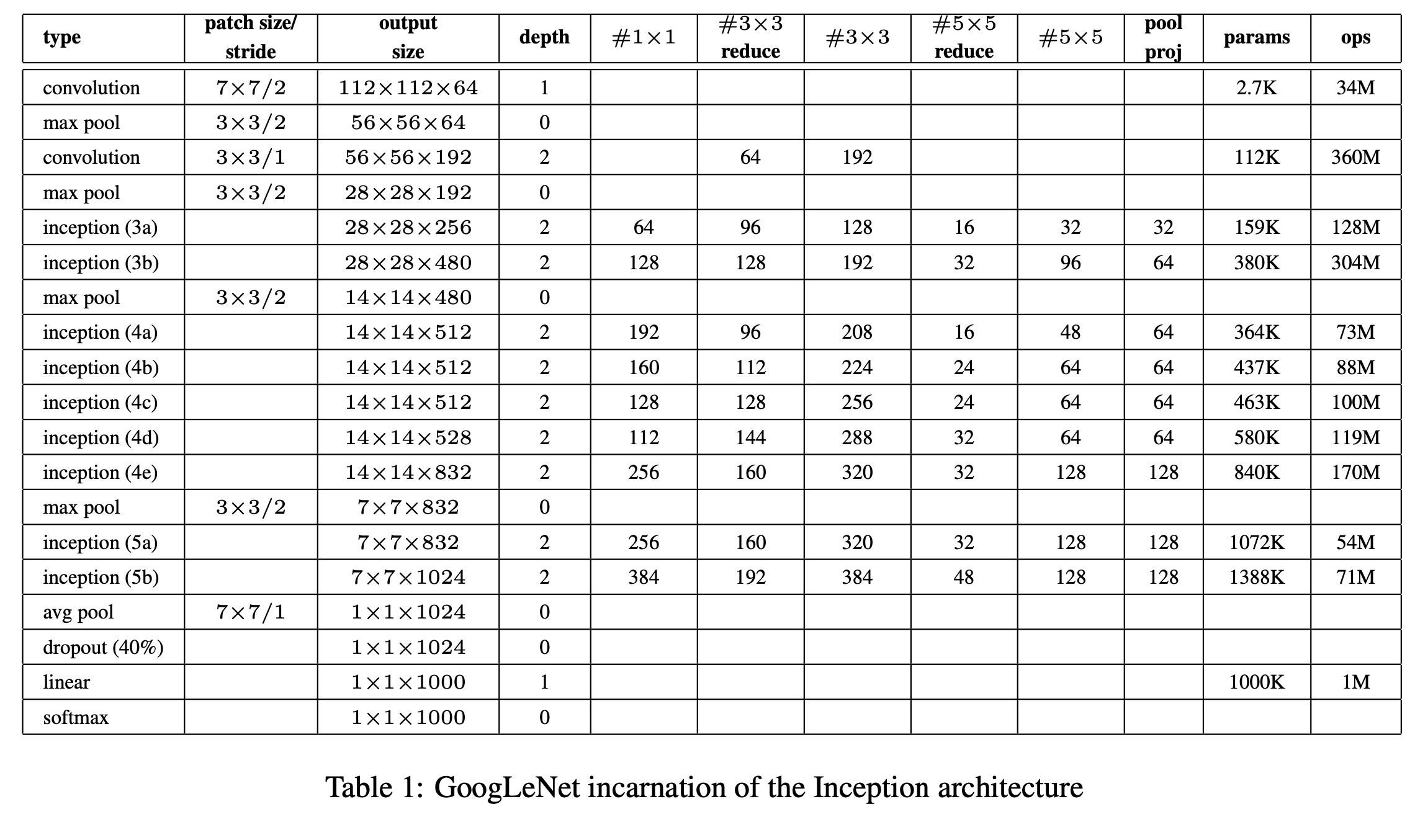

The specific Inception network they built was named GoogLeNet, in homage to Yann LeCun's pioneering LeNet network.

This incarnation is shown in Table 1 below.

**ReLU.** All activations use rectified linear units (ReLU).

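**Practicality.** The network was designed with computational efficiency and practicality in mind, so that inference could run on individual devices, including those with limited computational resources and a small memory footprint. It is 22 layers deep when counting only layers with parameters (27 if pooling layers are also counted), and the overall number of independent building blocks is about 100.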
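As a worked check of the 22 and 27 figures, the sketch below sums a per-stage depth column. The stage list is a transcription of the depth column of Table 1 in the GoogLeNet paper and should be treated as an assumption; `STAGES` and `count_depth` are hypothetical names used only for illustration. Stages such as dropout and softmax contribute no depth.

```python
# Hypothetical transcription of the depth column of GoogLeNet's Table 1 (an assumption).
# Each entry: (stage name, number of layers with parameters, is it a pooling layer?)
MAX_POOL = ("max pool 3x3/2", 0, True)

STAGES = (
    [("convolution 7x7/2", 1, False), MAX_POOL,
     ("convolution 3x3/1 (with 1x1 reduce)", 2, False), MAX_POOL,
     ("inception (3a)", 2, False), ("inception (3b)", 2, False), MAX_POOL]
    + [(f"inception (4{c})", 2, False) for c in "abcde"]  # five modules, 4a..4e
    + [MAX_POOL,
       ("inception (5a)", 2, False), ("inception (5b)", 2, False),
       ("avg pool 7x7/1", 0, True),
       ("dropout", 0, False),
       ("linear", 1, False),
       ("softmax", 0, False)]
)


def count_depth(stages):
    """Return (depth counting only parameterized layers, depth also counting pooling)."""
    param_layers = sum(depth for _, depth, _ in stages)
    pooling_layers = sum(1 for _, _, is_pool in stages if is_pool)
    return param_layers, param_layers + pooling_layers


print(count_depth(STAGES))  # (22, 27)
```

Each Inception module counts as two layers deep because its deepest path is a 1x1 reduction followed by a 3x3 or 5x5 convolution.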

**Average Pooling.** Empirically, they found that replacing the fully connected layers before the classifier with average pooling improved top-1 accuracy by about 0.6%.
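The sketch below illustrates why average pooling is attractive at this point in the network: it collapses each channel of the final feature map to a single number and has no learnable weights, whereas a fully connected layer over the same flattened map needs a great many. The 7x7x1024 map size is the one listed before the average pool in the paper's Table 1; the 1024-unit fully connected layer is a hypothetical comparison point, and the helper names are illustrative, not from the paper.

```python
def global_average_pool(feature_map):
    """Average each channel over all spatial positions.

    feature_map is indexed [row][col][channel] (H x W x C nested lists).
    Returns a length-C list with one scalar per channel. No weights are learned.
    """
    height = len(feature_map)
    width = len(feature_map[0])
    channels = len(feature_map[0][0])
    sums = [0.0] * channels
    for row in feature_map:
        for pixel in row:
            for c, value in enumerate(pixel):
                sums[c] += value
    return [s / (height * width) for s in sums]


def fc_weight_count(height, width, channels, out_features):
    """Weights (biases excluded) of a hypothetical FC layer over the flattened map."""
    return height * width * channels * out_features


# A tiny 2x2 map with 2 channels: channel 0 holds 1,3,5,7 and channel 1 holds 2,4,6,8.
tiny = [[[1, 2], [3, 4]],
        [[5, 6], [7, 8]]]
print(global_average_pool(tiny))           # [4.0, 5.0]
print(fc_weight_count(7, 7, 1024, 1024))   # 51380224
```

Average pooling keeps the 1024 channel values and discards spatial position, which is why the final classifier on top of it can stay a single linear layer.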

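**Backpropagation Concerns.** Given the network's depth, they were concerned about whether gradients could propagate effectively back through all of its layers. To address this, they attached auxiliary classifiers to intermediate layers (on top of the Inception (4a) and (4d) modules); during training, the auxiliary losses are added to the total loss with a discount weight of 0.3. At inference, these auxiliary classifiers are discarded.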
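A minimal sketch of the combined training loss, in plain Python for a single example: each auxiliary head's cross-entropy is scaled by a discount weight (0.3 in the GoogLeNet paper) and added to the main head's loss, while prediction uses only the main head. The function names are hypothetical, and a real implementation would compute this over batches inside a deep learning framework.

```python
import math

AUX_WEIGHT = 0.3  # discount weight applied to each auxiliary loss (value from the GoogLeNet paper)


def cross_entropy(logits, target):
    """Softmax cross-entropy for one example, using a numerically stable log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def training_loss(main_logits, aux_logits_list, target, aux_weight=AUX_WEIGHT):
    """Main-head loss plus discounted auxiliary-head losses (used during training only)."""
    loss = cross_entropy(main_logits, target)
    for aux_logits in aux_logits_list:
        loss += aux_weight * cross_entropy(aux_logits, target)
    return loss


def predict(main_logits):
    """At inference the auxiliary heads are discarded; only the main head decides."""
    return max(range(len(main_logits)), key=lambda i: main_logits[i])
```

For example, with two classes, uniform logits everywhere, and two auxiliary heads, `training_loss([0, 0], [[0, 0], [0, 0]], 0)` equals `1.6 * math.log(2)`: the main loss `log 2` plus `0.3 * log 2` from each auxiliary head.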

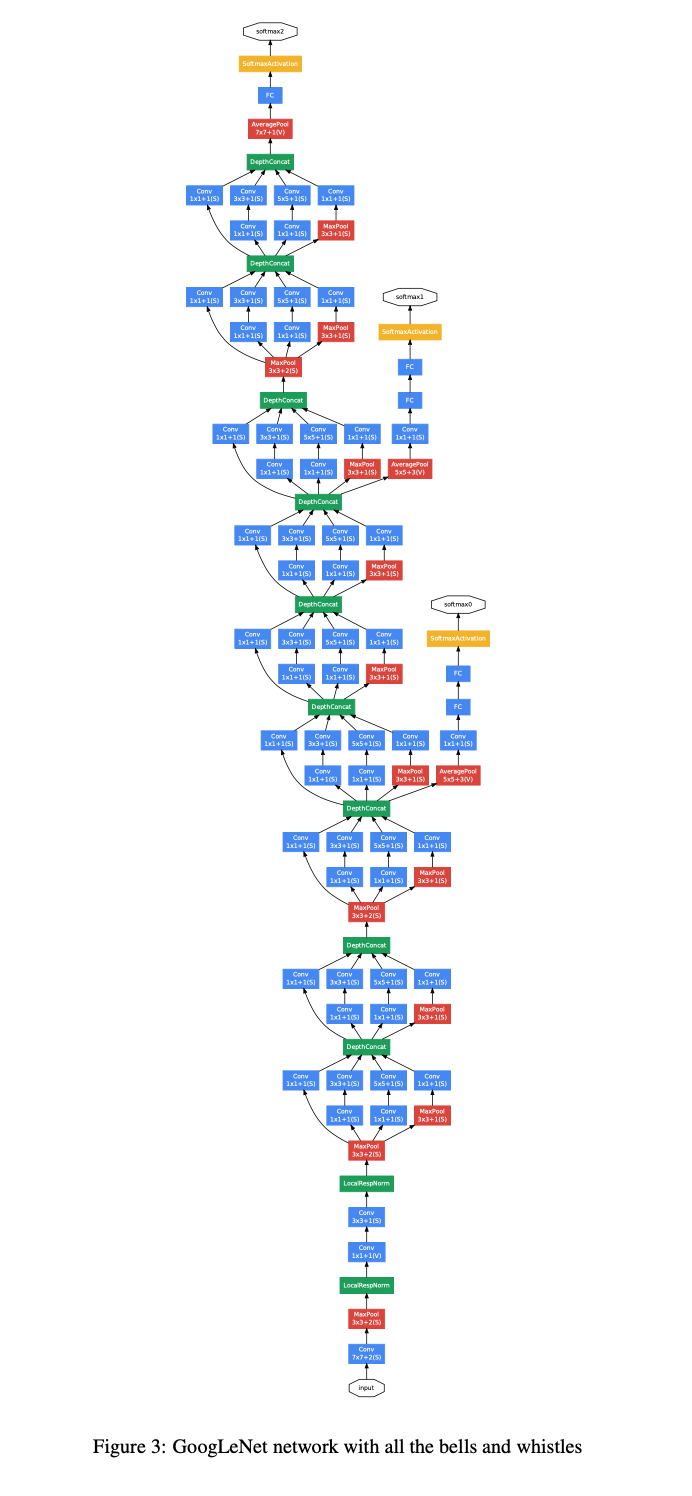

Below is a block diagram of GoogLeNet.