2 Mishkin LSUV

Layer Sequential Unit Variance

I find it important to remind myself that unit variance only means having a variance equal to one.

The LSUV initialization method is an attempt to generalize the work done by Glorot to other non-linearities such as the work done by Kaiming on ReLUs. Importantly, they focus not on a singularly theoretical approach, which must be understood for each layer type, but they do focus on a data-driven weights initialization scheme.

An optimization is to only run the algorithm on the first mini-batch. The normalization has experientially been shown to be sufficient and is more efficient than full batch norm.

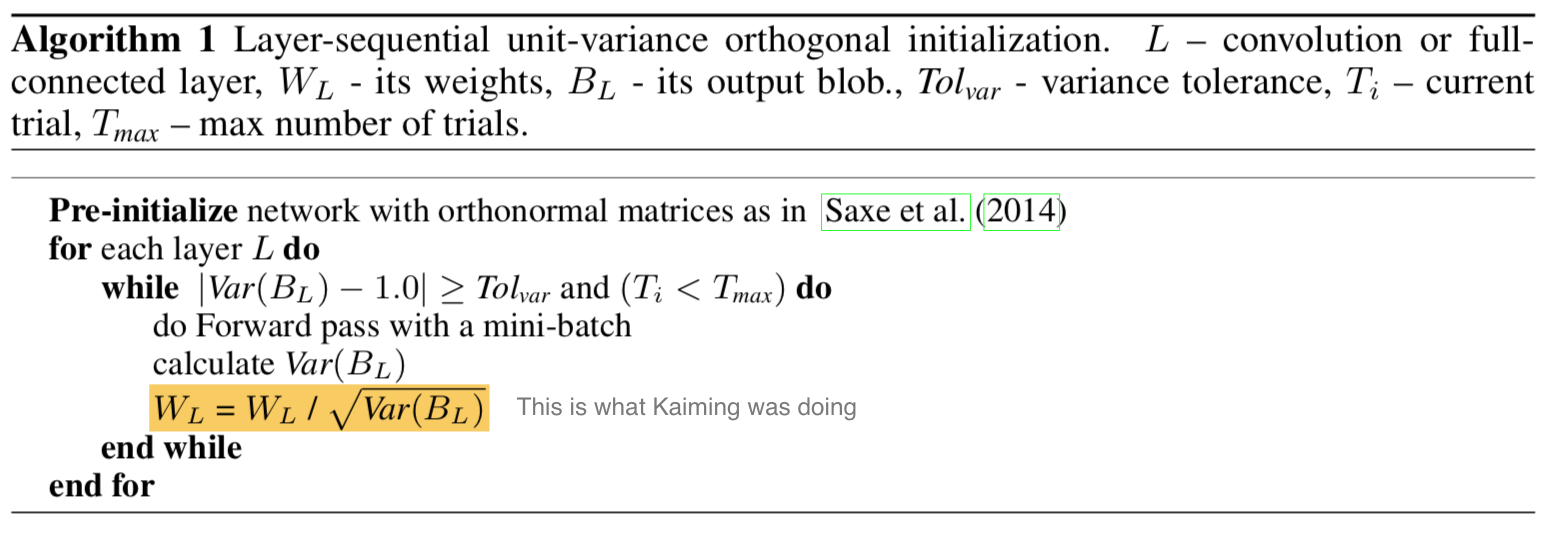

The algorithm below highlights its data driven approach with similar computation to Kaiming.