In biology, both sexual and asexual reproduction are viable ways of producing organisms. However, the most complex organisms tend to reproduce sexually. One proposed explanation is that sexual reproduction favors genes that can work well alongside many other genes, rather than sets of genes that are tightly co-adapted to one another. In effect, the mechanism appears to select for robustness in individual genes.

Neural networks borrow many intuitions from biology, partly because they were inspired by biological systems, and partly because we have a wealth of experience with such systems even though we do not yet fully understand them. I think it helps to tie these complex ideas to something we have studied in relatively more depth.

Dropout works by forcing each hidden unit of the network to be more robust on its own. It prevents extensive co-adaptation between units, which reduces over-specialization and improves generalization. A dropout probability of 0.5 is commonly used for hidden units, which is interesting given that sexual reproduction takes half of the genes from each parent, so much of each parent's genome doesn't directly contribute to the next generation.

Related work

Previous work on denoising autoencoders (DAEs) shows that adding noise to the inputs can produce a more robust network.

Description

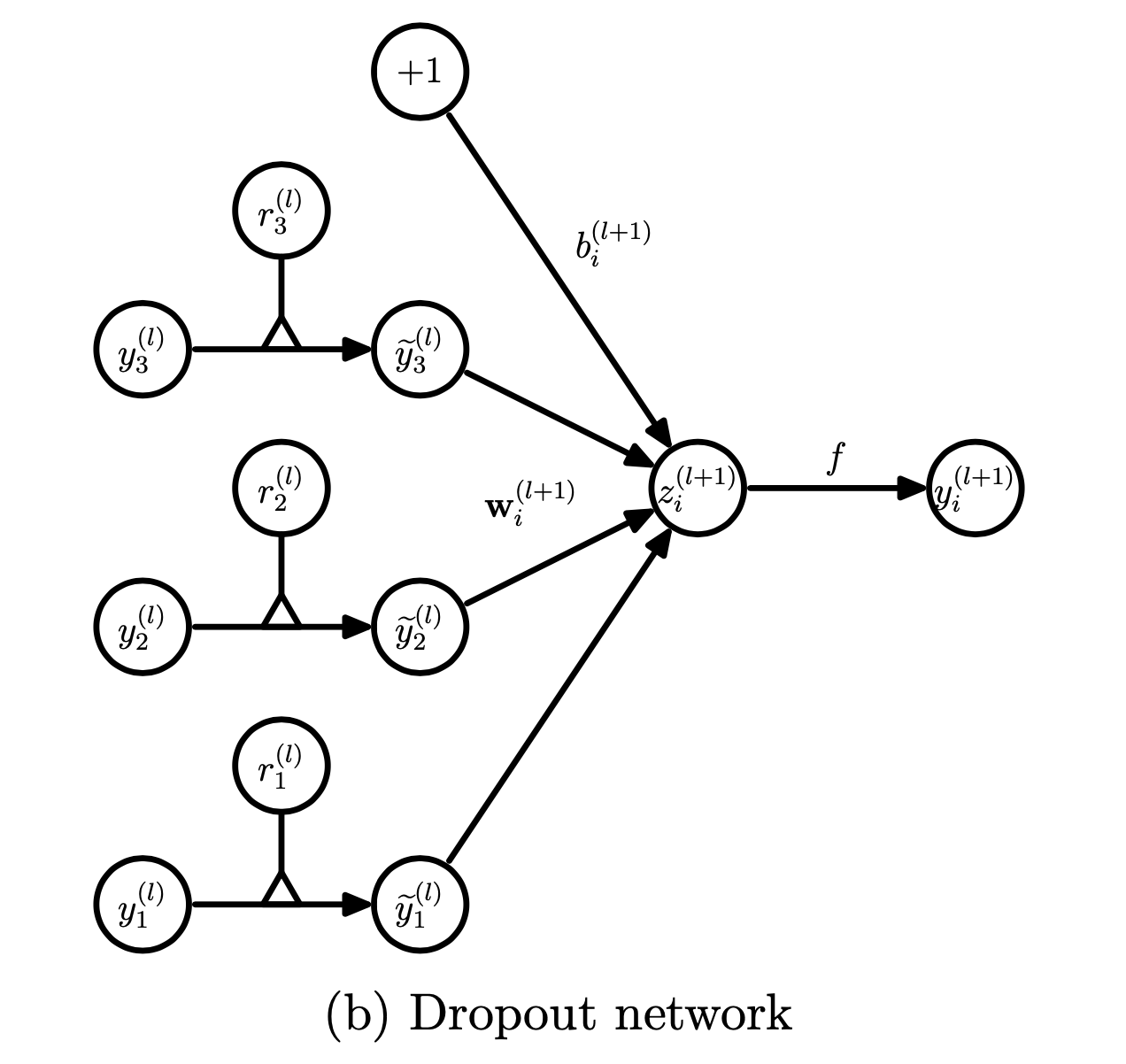

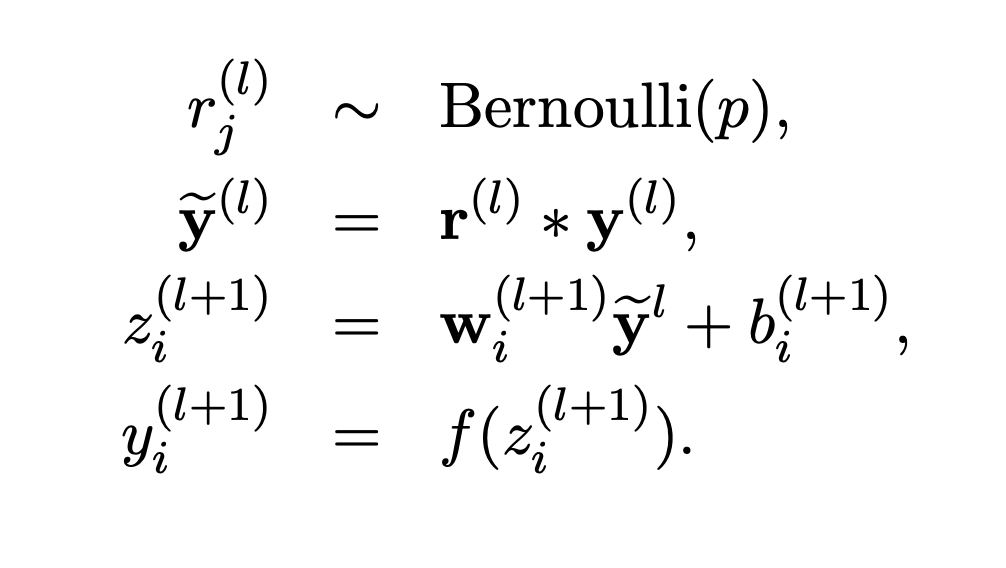

The specifics of dropout are pretty simple. Build a random binary vector r and multiply it element-wise with the previous layer's outputs y, so that the zeros in r zero out the corresponding outputs in y. The pre-activation z is then computed with the usual w*x + b, where x is the thinned y.
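To make the masking step concrete, here is a minimal sketch in plain Python. The function name `dropout_forward`, the argument `p_keep`, and the toy numbers are illustrative assumptions rather than anything from the paper. The sketch covers only the training-time forward step for a single layer.

```python
import random

def dropout_forward(y, weights, bias, p_keep=0.5, rng=random):
    """One dropout forward step for a single layer (illustrative sketch).

    y       : list of outputs from the previous layer
    weights : list of rows, one row of len(y) weights per output unit
    bias    : list of biases, one per output unit
    p_keep  : probability that each unit in y is kept (assumed 0.5 here)
    """
    # r: random binary mask; 1 keeps a unit, 0 drops it
    r = [1 if rng.random() < p_keep else 0 for _ in y]
    # Thinned outputs: element-wise product of the mask and y
    y_thin = [r_i * y_i for r_i, y_i in zip(r, y)]
    # z: the usual w*x + b, computed on the thinned outputs
    z = [sum(w_ij * y_j for w_ij, y_j in zip(row, y_thin)) + b_i
         for row, b_i in zip(weights, bias)]
    return r, y_thin, z

# Toy example: 4 units in the previous layer, 2 units in the next one
rng = random.Random(0)  # seeded so the run is repeatable
y = [0.5, -1.0, 2.0, 0.25]
weights = [[1.0, 1.0, 1.0, 1.0],
           [0.5, 0.0, -0.5, 2.0]]
bias = [0.1, -0.2]

r, y_thin, z = dropout_forward(y, weights, bias, p_keep=0.5, rng=rng)
print("mask r:   ", r)
print("thinned y:", y_thin)
print("z:        ", z)
```

Wherever r holds a zero, the matching entry of the thinned y is zero, so that unit contributes nothing to z on this pass. A new mask is drawn on every call, so each pass trains a different thinned network.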

Below you can see a graphic representation where r gates the y outputs.